Greetings, computer vision practitioners👮! I hope your projects are going well. Recently, I received a new requirement from a client regarding an object detection solution that had already been deployed successfully as a REST API and integrated into both web and mobile applications. However, the client ran into network reliability issues: in rural areas, poor connectivity occasionally caused the solution to fail on mobile devices💔.

As we know, network disruptions are a common challenge, but they become critical when they impact business operations. My client sought a robust solution to address this issue, ensuring uninterrupted functionality regardless of network conditions. In this blog post, we will explore how I tackled this challenge and implemented an effective solution💓.

The Solution: Enabling Offline Object Detection for Mobile Devices

Given that network connectivity issues are beyond our control, the most effective solution I proposed was to run the model offline, directly within the mobile application💥. Instead of relying on a cloud-hosted or on-premises REST API, the object detection model would run natively on the device, so the app keeps working without an internet connection💣.

Sounds interesting💁? Absolutely! Since my client was using a Darknet/YOLOv4-based model, the next step was to convert it into a format optimized for offline deployment on mobile devices. For both Android and iOS, the most suitable format is TensorFlow Lite (TFLite), which Google has since renamed LiteRT. In the next section, we will dive into the process of converting the YOLOv4 model to TFLite and integrating it into the mobile application💪.

Converting Darknet/YOLOv4 Weights to TFLite Format:-

In this section, we will explore the step-by-step process of successfully converting Darknet/YOLOv4 weights to TFLite format😎. For demonstration purposes, I will be using a Kaggle Notebook, but you are free to use any environment of your choice for the conversion.

It is important to note that Darknet/YOLOv4 weights cannot be converted directly to TFLite (now LiteRT). The conversion requires an intermediate step:

1. First, we convert the Darknet model to the ONNX format, which is an open standard for machine learning model interoperability.

2. Then, we convert the ONNX model to TFLite, which is optimized for mobile deployment.
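Why is the ONNX step needed at all? A Darknet `.weights` file is only a short header followed by raw float32 values. The network structure lives entirely in the `.cfg` file, so a converter has to rebuild the graph from both files together. The sketch below reads that header. It follows the layout used by Darknet's weight loader: three int32 version fields, then a `seen` counter that is 64-bit or 32-bit depending on the version. Little-endian byte order, the `DarknetHeader`/`parse_darknet_header` names, and the synthetic blob are assumptions for illustration.

```python
import struct
from typing import NamedTuple


class DarknetHeader(NamedTuple):
    major: int
    minor: int
    revision: int
    seen: int          # images seen during training
    header_size: int   # bytes consumed by the header
    num_floats: int    # float32 values that follow the header


def parse_darknet_header(data: bytes) -> DarknetHeader:
    """Read the header of a Darknet .weights blob (little-endian assumed)."""
    major, minor, revision = struct.unpack_from("<3i", data, 0)
    offset = 12
    # Newer files store the 'seen' counter as 64 bits, older files as 32 bits.
    if (major * 10 + minor) >= 2 and major < 1000 and minor < 1000:
        (seen,) = struct.unpack_from("<Q", data, offset)
        offset += 8
    else:
        (seen,) = struct.unpack_from("<i", data, offset)
        offset += 4
    # The rest is a flat run of float32 weights with no layer names or shapes.
    # Only the .cfg file says how to slice it into per-layer parameters.
    return DarknetHeader(major, minor, revision, seen, offset,
                         (len(data) - offset) // 4)


# Synthetic example: version 0.2.0, seen=1000, followed by three weights.
blob = struct.pack("<3iQ3f", 0, 2, 0, 1000, 0.5, -1.0, 2.0)
header = parse_darknet_header(blob)
print(header.header_size, header.num_floats)  # 20 3
```

The key point is the last comment: nothing in the weights file says where one layer ends and the next begins.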

To begin the conversion, we will clone the required GitHub repository (and if you find it useful, don’t forget to give it a star ⭐ on GitHub!). Let’s get started!

After installing the required packages, we will run the following conversion command from the yolov4_pytorch directory:

Understanding the ONNX Conversion Command:-

In the command above, I have specified the paths to the Darknet/YOLOv4 configuration file, object names file, and weights file, along with an example image. After the model is converted to ONNX, this image is used to visualize the detection results by drawing the predicted bounding boxes on it.
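The configuration file is what describes the architecture. It is an INI-like list of `[section]` blocks (`[net]`, `[convolutional]`, `[yolo]`, ...). The order of the sections defines the layer order, and the `[net]` width/height fix the model's input size. To show roughly what a converter reads from this file, here is a minimal parser. It is a sketch, not the code the conversion repository uses, and the config fragment is illustrative rather than a complete YOLOv4-Tiny config.

```python
from typing import Dict, List, Tuple


def parse_darknet_cfg(text: str) -> List[Tuple[str, Dict[str, str]]]:
    """Parse Darknet .cfg text into an ordered list of (section, options).

    Section names such as [convolutional] repeat, so order is preserved in a
    list rather than collapsed into a dict.
    """
    sections: List[Tuple[str, Dict[str, str]]] = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line[0] in "#;":      # blank line or comment
            continue
        if line.startswith("[") and line.endswith("]"):
            sections.append((line[1:-1].strip(), {}))
        elif "=" in line and sections:
            key, value = line.split("=", 1)
            sections[-1][1][key.strip()] = value.strip()
    return sections


# Trimmed, illustrative fragment (not a complete YOLOv4-Tiny config).
CFG = """
[net]
width=416
height=416
channels=3

# first backbone layer
[convolutional]
batch_normalize=1
filters=32
size=3
stride=2
activation=leaky

[yolo]
classes=80
"""

layers = parse_darknet_cfg(CFG)
print([name for name, _ in layers])  # ['net', 'convolutional', 'yolo']
print(layers[0][1]["width"])         # 416
```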

Customizing and Verifying the ONNX Conversion:-

You can replace the specified configuration, object names, and weights files with your own as needed. When you run the command, the conversion starts. The ONNX model's predicted bounding boxes are then drawn on the example image and saved as an output image💫. This lets us check for discrepancies between the original Darknet/YOLOv4 model and the converted ONNX model.

At the end of the logs, you will see messages similar to the following:

As seen in the logs above, the converted ONNX model detects objects such as the bicycle, truck, and dog, indicating that the conversion preserved the model's predictions💘.
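Eyeballing one output image works for a quick check. To compare Darknet and ONNX detections across many images, one option is to match boxes by label and intersection over union (IoU). The sketch below does this in plain Python. The `(label, confidence, box)` tuple format, the 0.9 IoU threshold, and the detections themselves are hypothetical, not output of the conversion script.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]   # (x1, y1, x2, y2) in pixels
Detection = Tuple[str, float, Box]        # (label, confidence, box)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two corner-format boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def unmatched(reference: List[Detection], candidate: List[Detection],
              iou_thresh: float = 0.9) -> List[Detection]:
    """Reference detections with no same-label candidate at IoU >= iou_thresh.

    Each candidate can match at most one reference detection.
    """
    remaining = list(candidate)
    missing: List[Detection] = []
    for det in reference:
        label, _conf, box = det
        best_j, best_iou = None, iou_thresh
        for j, (c_label, _c_conf, c_box) in enumerate(remaining):
            if c_label != label:
                continue
            score = iou(box, c_box)
            if score >= best_iou:
                best_j, best_iou = j, score
        if best_j is None:
            missing.append(det)
        else:
            remaining.pop(best_j)
    return missing


# Hypothetical detections, not real model output.
darknet = [("dog", 0.98, (100, 200, 300, 500)), ("truck", 0.92, (450, 80, 690, 170))]
onnx = [("dog", 0.97, (101, 201, 301, 501)), ("truck", 0.91, (451, 80, 690, 171))]
print(unmatched(darknet, onnx))  # []
```

An empty list means every reference detection has a close counterpart. Any entries returned are the discrepancies worth investigating.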

Now, we can proceed with converting the ONNX model to TFLite format. For this step, we will utilize a highly efficient GitHub repository specifically designed for ONNX-to-TFLite conversion. I highly recommend giving this repository a ⭐ on GitHub, as it provides a seamless and reliable way to convert almost any ONNX model into TFLite format. Let’s dive into the conversion process!

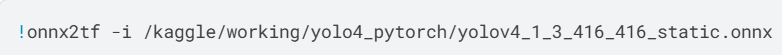

Next, we will run the following command to start the TFLite conversion:

Executing the ONNX to TFLite Conversion:-

In the command above, we only need to specify the path to the ONNX model. Once executed, the tool handles the entire graph conversion, including the switch to TFLite's NHWC tensor layout. When it finishes, it generates Float16 and Float32 TFLite models, which are stored inside the saved_model folder.
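To check programmatically which precisions were produced and how large they are, a small helper can scan `saved_model`. This is a sketch. The `_float16`/`_float32` file-name suffixes are an assumption about how the converter names its outputs, and `pick_by_precision`/`list_converted_models` are hypothetical helpers.

```python
from pathlib import Path
from typing import Dict, Iterable


def pick_by_precision(paths: Iterable[Path]) -> Dict[str, Path]:
    """Map 'float16'/'float32' to .tflite files whose names end with that tag.

    The '<name>_float16.tflite' / '<name>_float32.tflite' naming is an
    assumption; change the tags if your converter names files differently.
    """
    found: Dict[str, Path] = {}
    for path in paths:
        if path.suffix != ".tflite":
            continue
        for precision in ("float16", "float32"):
            if path.stem.endswith("_" + precision):
                found[precision] = path
    return found


def list_converted_models(folder: str = "saved_model") -> Dict[str, Path]:
    """Scan the converter's output folder and print each model's size."""
    models = pick_by_precision(sorted(Path(folder).glob("*.tflite")))
    for precision, path in models.items():
        size_mb = path.stat().st_size / (1024 * 1024)
        print(f"{precision}: {path.name} ({size_mb:.1f} MB)")
    return models
```

Keeping the name matching separate from the filesystem scan makes the matching logic easy to test without real model files.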

Model Size and Verifying Predictions:-

If you check the size of the Float16 TFLite model, it is approximately 11 MB, about half the size of the original YOLOv4-Tiny weights used in this example (23 MB)💥. The saving comes from storing each weight as a 16-bit float (2 bytes) instead of a 32-bit float (4 bytes). This reduction matters for mobile deployment, where app size and memory are limited.
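The roughly halved size follows directly from the storage format: IEEE 754 half precision (float16) takes 2 bytes per value, while float32 takes 4. The sketch below applies that arithmetic to the 23 MB figure above. It also shows the other side of the trade-off: float16 rounds values slightly, which is why the converted model's predictions still need checking. The estimate assumes file size is dominated by the weights and ignores graph metadata. The helper names are hypothetical.

```python
import struct

# Bytes per value: 'f' is IEEE 754 float32, 'e' is IEEE 754 half precision.
FP32_BYTES = struct.calcsize("<f")  # 4
FP16_BYTES = struct.calcsize("<e")  # 2


def estimate_fp16_size_mb(fp32_size_mb: float) -> float:
    """Rough float16 size if every weight is stored in half precision.

    Ignores graph metadata and any tensors kept in float32, so a real file
    can come out slightly larger.
    """
    return fp32_size_mb * FP16_BYTES / FP32_BYTES


def fp16_round_trip(x: float) -> float:
    """Return x after being stored as float16 and read back."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


print(estimate_fp16_size_mb(23.0))  # 11.5
print(fp16_round_trip(0.1))         # 0.0999755859375
```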

Next, we need to verify whether the converted TFLite model produces the same predictions as the original model. To do this, we can either write a Python inference script or collaborate with an Android developer to implement it directly within the mobile application👷.

Below, I am sharing my Python script for running inference with the converted model.

We can use the OpenCV library to load the TFLite model directly, as shown below:
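Whichever runtime loads the model (OpenCV here, or the TFLite interpreter inside an app), the image first has to become a batch-of-one NHWC float tensor. Below is a minimal NumPy preprocessing sketch with the following assumptions:

* a 416×416 input (check the `width`/`height` in your `.cfg`)
* RGB channel order
* scaling to [0, 1]

The nearest-neighbour resize only keeps the example dependency-free; in a real script `cv2.resize` would be the usual choice. `preprocess_nhwc` is a hypothetical name.

```python
import numpy as np


def preprocess_nhwc(image_bgr: np.ndarray, size: int = 416) -> np.ndarray:
    """Turn an HxWx3 uint8 BGR image (as returned by cv2.imread) into a
    1 x size x size x 3 float32 tensor in [0, 1] (NHWC).

    Assumptions: square input of `size`, RGB channel order, /255 scaling.
    """
    h, w = image_bgr.shape[:2]
    # Nearest-neighbour resize via index maps (cv2.resize is the usual choice).
    rows = np.arange(size) * h // size
    cols = np.arange(size) * w // size
    resized = image_bgr[rows[:, None], cols[None, :]]   # size x size x 3
    rgb = resized[..., ::-1]                            # BGR -> RGB
    tensor = rgb.astype(np.float32) / 255.0             # scale to [0, 1]
    return tensor[np.newaxis, ...]                      # add batch dim -> NHWC


# Synthetic 480x640 BGR frame that is pure blue.
frame = np.zeros((480, 640, 3), dtype=np.uint8)
frame[..., 0] = 255
batch = preprocess_nhwc(frame)
print(batch.shape, batch.dtype)  # (1, 416, 416, 3) float32
```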

Post-Processing and Displaying TFLite Model Output:-

After applying essential post-processing techniques, such as Non-Maximum Suppression (NMS) to eliminate redundant bounding boxes, we can visualize the final output of the TFLite model on the given image, as shown below.
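NMS itself is short enough to sketch. The version below is standard greedy, per-class non-maximum suppression in plain Python. The 0.4 score threshold and 0.45 IoU threshold are illustrative defaults, not values from the author's script. The sketch also assumes boxes have already been decoded to (x1, y1, x2, y2) pixel coordinates with one best class per box.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def box_iou(a: Box, b: Box) -> float:
    """Intersection over union of two corner-format boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def nms(boxes: Sequence[Box], scores: Sequence[float], classes: Sequence[int],
        score_thresh: float = 0.4, iou_thresh: float = 0.45) -> List[int]:
    """Greedy per-class non-maximum suppression; returns indices of kept boxes."""
    # 1. Drop low-confidence boxes, then visit the rest from highest score down.
    order = sorted((i for i, s in enumerate(scores) if s >= score_thresh),
                   key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        # 2. Keep a box unless a higher-scoring kept box of the same class
        #    overlaps it by more than iou_thresh.
        if all(classes[k] != classes[i]
               or box_iou(boxes[i], boxes[k]) <= iou_thresh
               for k in keep):
            keep.append(i)
    return keep


# Two near-duplicate boxes for class 0, one separate box, one low-score box.
boxes = [(10, 10, 110, 110), (12, 12, 112, 112), (200, 200, 300, 300), (11, 11, 111, 111)]
scores = [0.9, 0.8, 0.7, 0.3]
classes = [0, 0, 0, 1]
print(nms(boxes, scores, classes))  # [0, 2]
```

Running NMS per class means overlapping boxes of different classes are both kept. If that is not wanted, drop the class check.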

We can now confirm that the converted TFLite model's predictions match those of the original model👊. Additionally, this TFLite model uses the NHWC layout, which is TFLite's native tensor layout, so it can be integrated directly into mobile applications. With the model ready, we can hand it over to the mobile developer, who can import it into Android Studio for further app development and deployment👌.
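The layout difference is easy to get wrong when wiring the model into an app. An ONNX model exported from PyTorch takes NCHW input (batch, channels, height, width), while the TFLite model takes NHWC (batch, height, width, channels). The same pixel value simply moves to a different index. Here is a small NumPy sketch with a toy tensor; the helper names are hypothetical.

```python
import numpy as np


def nchw_to_nhwc(x: np.ndarray) -> np.ndarray:
    """Reorder (batch, channels, height, width) -> (batch, height, width, channels)."""
    return np.ascontiguousarray(x.transpose(0, 2, 3, 1))


def nhwc_to_nchw(x: np.ndarray) -> np.ndarray:
    """Inverse reordering, e.g. to compare against the ONNX model's NCHW input."""
    return np.ascontiguousarray(x.transpose(0, 3, 1, 2))


# Small deterministic tensor: batch 1, 3 channels, 2x4 pixels.
nchw = np.arange(1 * 3 * 2 * 4, dtype=np.float32).reshape(1, 3, 2, 4)
nhwc = nchw_to_nhwc(nchw)
print(nhwc.shape)  # (1, 2, 4, 3)
# The value for channel 2 at row 1, column 3 sits at a different index in each layout.
assert nchw[0, 2, 1, 3] == nhwc[0, 1, 3, 2]
```

On the app side, this means pixel data should be packed row by row, with the three channel values of each pixel stored next to each other.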

That’s all for today, everyone! In this blog post, we successfully walked through the correct process of converting Darknet/YOLOv4 weights to TFLite format, enabling seamless integration into mobile applications as an offline model. This skill not only enhances your expertise but also strengthens your capabilities as a computer vision practitioner👲.

Now, it's your turn! Use my Kaggle Notebook to convert your custom-trained YOLOv4 model to TFLite format and deploy it within a mobile application to demonstrate your computer vision expertise😇.

In the next post, we will explore the YOLOv7 model conversion to TFLite. Until then, keep pushing forward, chase your dreams, and make every second count! 🚀 See you in the next one!
