Another post starts with you beautiful people!

XGBoost is an optimized distributed gradient boosting library designed to be highly efficient, flexible and portable. It implements machine learning algorithms under the Gradient Boosting framework. XGBoost provides parallel tree boosting (also known as GBDT or GBM) that solves many data science problems in a fast and accurate way. XGBoost was recently released as version 1.0.0, with improvements such as better performance scaling on multi-core CPUs, an improved installation experience on macOS, and availability of distributed XGBoost on Kubernetes. In this post we are going to explore its multi-threading capabilities on a real-world ML problem, the Otto Group Product Classification Challenge. At the end of the post I will also share my Kaggle kernel link so that you can explore my complete code.

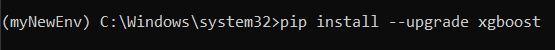

Once you open the challenge on Kaggle and start your kernel, you first need to enable the Internet option in the notebook, because the version of XGBoost preinstalled in the kernel notebook is 0.90. To upgrade it, run the following command in your Anaconda prompt:

`pip install --upgrade xgboost` (or `!pip install --upgrade xgboost` in your Kaggle kernel). The screenshot of the Anaconda prompt is below:

And here is a screenshot of my kernel:

After running the above command you will see the following screen, showing that the library was installed successfully:

Let's import the dataset:
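Since the original code cell is not reproduced here, the following is a minimal loading sketch. It assumes the competition's `train.csv` layout: an `id` column, the numeric feature columns, and a string `target` column (`Class_1` ... `Class_9`). The file path and the helper name `load_otto` are assumptions; on Kaggle the file sits under the competition's input directory. The string labels are converted to integers with scikit-learn's `LabelEncoder`, since recent XGBoost classifiers expect labels numbered from 0.

```python
import pandas as pd
from sklearn.preprocessing import LabelEncoder


def load_otto(path="train.csv"):
    """Load the Otto training data and return (features, integer labels).

    Assumes an 'id' column, numeric feature columns and a string 'target' column.
    """
    df = pd.read_csv(path)
    # Everything except the identifier and the label is a feature.
    X = df.drop(columns=["id", "target"]).to_numpy()
    # 'Class_1'...'Class_9' -> 0...8 (LabelEncoder sorts the class names).
    y = LabelEncoder().fit_transform(df["target"])
    return X, y
```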

This dataset describes 93 numeric features of 61,878 products grouped into 9 product categories (e.g. fashion, electronics, etc.). The input attributes are counts of different kinds of events. The goal is to predict, for each new product, an array of probabilities over the 9 categories, and models are evaluated using multi-class logarithmic loss (also called cross-entropy).
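As a worked example of the metric: multi-class log loss averages, over all products, the negative log of the probability the model assigned to each product's true class. The sketch below is a hand-rolled NumPy version, not the competition's official scorer; it clips probabilities away from 0 and 1 so that a single confident mistake cannot produce an infinite loss. scikit-learn's `sklearn.metrics.log_loss` computes the same quantity.

```python
import numpy as np


def multiclass_log_loss(y_true, proba, eps=1e-15):
    """Mean negative log-probability of the true class.

    y_true: integer labels, shape (n_samples,)
    proba:  predicted class probabilities, shape (n_samples, n_classes)
    """
    proba = np.clip(np.asarray(proba, dtype=float), eps, 1 - eps)
    y_true = np.asarray(y_true)
    # For each row, pick the probability assigned to the true class.
    p_true = proba[np.arange(len(y_true)), y_true]
    return -np.mean(np.log(p_true))
```

For example, `multiclass_log_loss([0, 1], [[0.5, 0.5], [0.2, 0.8]])` returns about 0.458, the mean of -log 0.5 and -log 0.8.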

Please note that XGBoost supports multi-threading by default. However, depending on our environment (for example, an XGBoost build compiled without OpenMP), we may need to enable multi-threading support explicitly. We can confirm that XGBoost's multi-threading support is working by building a number of different XGBoost models, specifying the number of threads for each, and timing how long each model takes to build. The trend will show us that multi-threading support is enabled and also indicate how much it speeds up model building. Below is the code snippet showing how you can check this:

You can change the list of thread counts to match your system configuration. The recommended value for the number of threads (nthread) is the number of physical CPU cores in your machine. After running the above code cell I get the following result:
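One way to build that list from the machine itself is sketched below. It assumes the third-party `psutil` package for the physical core count and falls back to `os.cpu_count()`, which counts logical cores (including hyper-threads), when `psutil` is not installed. The helper names and the powers-of-two spacing are my own choices.

```python
import os


def physical_core_count():
    """Best-effort number of physical CPU cores (at least 1)."""
    try:
        import psutil  # optional third-party dependency
        cores = psutil.cpu_count(logical=False)  # may return None
    except ImportError:
        cores = None
    # Fall back to logical cores if the physical count is unavailable.
    return cores or os.cpu_count() or 1


def thread_counts_to_try(max_threads=None):
    """Powers of two below max_threads, always ending with max_threads itself."""
    max_threads = max_threads or physical_core_count()
    counts, n = [], 1
    while n < max_threads:
        counts.append(n)
        n *= 2
    counts.append(max_threads)
    return counts
```

On a 6-core machine, for instance, `thread_counts_to_try()` gives `[1, 2, 4, 6]`.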

We can also plot the above trend as follows:
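A minimal matplotlib sketch of that plot, assuming `thread_counts` and `timings` are the two lists from the timing run; the helper name `plot_thread_timings` is hypothetical.

```python
import matplotlib.pyplot as plt


def plot_thread_timings(thread_counts, timings):
    """Line plot of training time against the number of threads."""
    fig, ax = plt.subplots()
    ax.plot(thread_counts, timings, marker="o")
    ax.set_xlabel("Number of threads")
    ax.set_ylabel("Training time (seconds)")
    ax.set_title("XGBoost training time vs. number of threads")
    return fig
```

In a notebook the returned figure is shown inline; in a script, call `plt.show()` or `fig.savefig(...)`.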

From the above plot we can see a clear decrease in execution time as the number of threads increases. You can run the same code on a machine with many more cores to reduce model training time further. Now you know how to configure the number of threads for XGBoost on your machine. But there is one more important step we can speed up. We routinely use cross-validation to estimate how well the model generalizes and to detect overfitting, and this step is also time-consuming. So is there any way to speed it up? Absolutely! We can enable multi-threading both in XGBoost and in cross-validation.

k-fold cross-validation also supports parallelism. For example, the n_jobs argument of the cross_val_score() function lets us specify the number of parallel jobs to run. By default it runs a single job, but it can be set to -1 to use all the CPU cores on our system. In the following code snippet we check three configurations of cross-validation and XGBoost parallelism and then compare the output of all three:

In the above code snippet you can see that the only parameters needed for multi-threading are nthread in XGBoost and n_jobs in cross-validation. For illustration I am using 1 thread here, but you can change it to the number of cores on your machine. After running the above cell, the fastest result is achieved by enabling multi-threading within XGBoost and not in cross-validation, as you can also see in the screenshot below:

So if you are going to do cross-validation with any library other than XGBoost 1.0.0, don't forget to enable parallelism in cross-validation; and if you are going to use XGBoost (which you should be), don't forget to check the number of threads and enable multi-threading within XGBoost itself. For the complete solution, you can find my kernel at the following link: my kernel

Fork my kernel and start experimenting with it, and if you would like to learn more about XGBoost, follow the tutorials of this amazing guy: Jason Brownlee PhD. Till then, go chase your dreams, have an awesome day, make every second count, and see you later in my next post.
