A new post starts with you beautiful people, and a very Happy New Year to all! Last year was fantastic for learning many new things in Data Science, and I was very happy to see that many aspiring data scientists like you contacted me through my Facebook page. It was an honor to be able to solve your queries and to motivate many of you!

This year too I will continue posting about what I learn and sharing it with you all, so don't stop, stay positive, keep practicing and try again and again :)

In this post I am going to share the nuts and bolts of handling a natural language processing (NLP) task, which I learnt while working for a Singapore client. In that project I collected product data from different e-commerce merchants through their APIs and web scraping, and then cleaned the text before applying a machine learning algorithm to classify the product categories. You may well encounter this kind of task yourself. So let's start our journey by first understanding some important fundamentals of NLP.

You must be aware that words and sentences play a very strong role in this world, since both can affect a human being in different ways. So teaching a machine to understand human language is currently a very fast growing field; take Amazon Alexa as an example. NLP is everywhere, whether it is translation, named entity recognition, relationship extraction, sentiment analysis, speech recognition or topic segmentation. You can see NLP as a field focused on making sense of language. Let's understand the key terms and their meaning before jumping into the Python coding part-

Corpus:- A corpus is a large collection of texts. It is a body of written or spoken material upon which a linguistic analysis is based. The plural form of corpus is corpora. NLTK comes with various built-in corpora, so for most tasks you just need to download them by calling nltk.download() from Python. You can also see a list of the built-in corpora in this link.

Lexicon:- A lexicon is a vocabulary, a list of words, a dictionary; for example, an English dictionary. In NLTK, any lexicon is considered a corpus, since a list of words is also a body of text. Lexicons differ between groups: to a financial investor, the first meaning of the word "Bull" is someone who is confident about the market, whereas in the common English lexicon the first meaning of the word "Bull" is an animal. As such, there are special lexicons for financial investors, doctors, children, mechanics, and so on.

Stop Words:- Stop words are words that are meaningless or contribute very little to the text analysis process. For example, many people frequently use 'hmmmm' or 'ummmm' in text messages; these have no meaning in English grammar, so such words should be excluded from the processed text. NLTK provides ready-made lists of common stop words, which you can access via the NLTK corpus: from nltk.corpus import stopwords.
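Here is a minimal sketch of stop word removal. It assumes the NLTK stopwords corpus has already been downloaded with nltk.download('stopwords'); if NLTK or the corpus is missing, it falls back to a tiny hand-written set, which is only my own illustration and not an NLTK list. The helper name remove_stop_words is hypothetical too.

```python
# Try NLTK's English stop word list; fall back to a tiny illustrative set
# if NLTK or its stopwords corpus is not available.
try:
    from nltk.corpus import stopwords
    stop_words = set(stopwords.words("english"))
except (ImportError, LookupError):
    stop_words = {"this", "is", "a", "an", "the", "and", "of", "to", "in"}


def remove_stop_words(text):
    """Lowercase the text, split on whitespace and drop stop words."""
    return [word for word in text.lower().split() if word not in stop_words]


filtered = remove_stop_words("This is a sample sentence")
print(filtered)
```

Fillers such as 'hmmmm' are not in the standard lists, so you could add them to stop_words yourself before filtering.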

Stemming:- Stemming is a kind of normalization method. In documents you may find many variations of a word that carry the same meaning, other than when tense is involved. For example, the sentences 'I was taking a ride in the car.' and 'I was riding in the car.' have the same meaning, and a machine should also understand that 'ride' and 'riding' are the same word at heart, which is why we do stemming. The most widely used stemming algorithm in NLP is the Porter stemmer, which you can use as from nltk.stem import PorterStemmer.
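A small sketch of the idea, assuming NLTK is installed (the Porter stemmer needs no extra data download). The word list is just my own example built around the 'ride' sentences above.

```python
from nltk.stem import PorterStemmer

stemmer = PorterStemmer()

# Different surface forms of the same word
words = ["ride", "rides", "riding"]
stems = [stemmer.stem(word) for word in words]

for word, stem in zip(words, stems):
    print(word, "->", stem)
```

All three forms collapse to the same stem, so a model treats them as one feature instead of three.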

Lemmatizing:- Lemmatizing is a very similar operation to stemming. The major difference between them is that stemming can often create non-existent words, whereas lemmas are actual words. Lemmatization normally aims to remove inflectional endings only and to return the base or dictionary form of a word, which is known as the lemma. You can lemmatize with WordNetLemmatizer from the nltk.stem package.

Tokenization:- Since a machine does not have any understanding of alphabets, grammar, punctuation etc., it cannot understand raw text. You need to divide the raw text into small chunks or tokens. This text breaking process is known as tokenization. The tokens may be words, numbers or punctuation marks. One challenge in this process is that it depends on the type of language, so it produces different results for different languages. For example, languages such as English and French are referred to as space-delimited, as most of the words are separated from each other by white spaces. Languages such as Chinese and Thai are referred to as unsegmented, as words do not have clear boundaries. In Python the most common and useful toolkit for handling NLP is nltk.

We can tokenize a string into words, splitting off punctuation other than periods, using the word_tokenize function, and we can tokenize a document into sentences using the sent_tokenize function from the nltk.tokenize library. There is also a special class for tokenizing Twitter tweets, named TweetTokenizer, in the nltk.tokenize library. I recommend you visit the following link once to understand all of the nltk tokenizers- tokenization
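As a quick sketch, here is TweetTokenizer on a made-up tweet. I picked it because it is rule based and should run without any extra NLTK data; word_tokenize and sent_tokenize are used the same way but need NLTK's Punkt tokenizer data to be downloaded first. The tweet text and the handle in it are hypothetical.

```python
from nltk.tokenize import TweetTokenizer

tweet = "I love #NLP :) thanks @nltk_org"

# TweetTokenizer keeps hashtags, handles and emoticons together as single tokens
tokenizer = TweetTokenizer()
tokens = tokenizer.tokenize(tweet)
print(tokens)
```

A plain whitespace split would work for this tweet too, but it breaks down as soon as punctuation is attached to words, which is where a real tokenizer earns its place.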

POS Tagging:- POS or Part Of Speech tagging is one of the more powerful aspects of the NLTK module. Using POS tagging, you can easily label the words in a sentence as nouns, adjectives, verbs etc. The tagging itself is done with nltk.pos_tag on a list of word tokens; PunktSentenceTokenizer from the nltk.tokenize library is handy for first splitting the text into sentences.

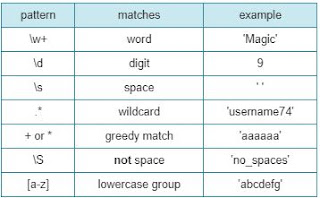

Regex:- A regex or regular expression is a pattern which can match one or more character strings. It lets us pick out many kinds of patterns from text. Some common regex patterns are as below-

We can use regex features by importing Python's re library. The re library has various methods, including split, findall, search and match, which you can use to find patterns.
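To make the four methods concrete, here is a short sketch using only the standard library. The sample sentence and the patterns are my own illustration.

```python
import re

text = "Order 66 shipped on 2019-01-05, order 67 ships on 2019-01-09."

# split: break the text wherever the pattern matches (a comma plus optional spaces)
parts = re.split(r",\s*", text)

# findall: return every non-overlapping match as a list
dates = re.findall(r"\d{4}-\d{2}-\d{2}", text)

# search: find the first match anywhere in the string
first_number = re.search(r"\d+", text)

# match: only succeeds if the pattern matches at the start of the string
starts_with_order = re.match(r"Order", text)
starts_with_digit = re.match(r"\d", text)

print(parts)
print(dates)
print(first_number.group())
print(starts_with_order is not None, starts_with_digit is not None)
```

The difference between search and match trips up many beginners: match looks only at the beginning of the string, while search scans the whole string.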

Named Entity Recognition:- NER or Named Entity Recognition is a process which enables a machine to pull out "entities" like people, places, things, locations, monetary figures, and more. NLTK can either mark all named entities generically, or label each named entity with its respective type, like people, places, locations, etc.

WordNet:- WordNet is a lexical database for the English language which is available as part of the NLTK corpora. It is used to find the meanings of words, synonyms, antonyms etc. We can also use WordNet to compare the similarity of two words and their tenses.

Feature Extraction:- Feature extraction or vectorization is the process of encoding words in numerical form, either as integers or as floating point values. This step is required because most machine learning algorithms accept only numerical input. The scikit-learn library provides easy-to-use tools for this step, like CountVectorizer, TfidfVectorizer and HashingVectorizer. CountVectorizer converts text to word count vectors, TfidfVectorizer converts text to TF-IDF weighted word frequency vectors, and HashingVectorizer hashes each token to a column index in a fixed-size vector, so no vocabulary has to be stored.
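The three vectorizers can be compared side by side on a toy corpus. This is only a sketch with default settings; the three documents and n_features=16 are my own choices for illustration.

```python
from sklearn.feature_extraction.text import (
    CountVectorizer,
    HashingVectorizer,
    TfidfVectorizer,
)

docs = ["the cat sat", "the cat sat on the mat", "dogs chase cats"]

# Raw word counts, one column per vocabulary word
count_vec = CountVectorizer()
counts = count_vec.fit_transform(docs)

# TF-IDF weights: counts scaled down for words that occur in many documents
tfidf_vec = TfidfVectorizer()
tfidf = tfidf_vec.fit_transform(docs)

# Hashing trick: each token is hashed to one of n_features columns
hash_vec = HashingVectorizer(n_features=16)
hashed = hash_vec.transform(docs)

print(counts.shape, tfidf.shape, hashed.shape)
print(sorted(count_vec.vocabulary_))
```

Notice that HashingVectorizer needs no fit step, which makes it handy for very large datasets, at the price of not being able to map a column back to a word.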

That is enough theory for any NLP task. Now it's time to work on an actual NLP problem, where we need to preprocess text before applying any machine learning algorithm. For this exercise we are going to work on a text classification problem: a large number of Wikipedia comments which have been labeled by human raters for toxic behavior. You can download this dataset from here. The types of toxicity are:

- toxic

- severe_toxic

- obscene

- threat

- insult

- identity_hate

Here your goal is to create a model which predicts the probability of each type of toxicity for each comment.

Step 1:- Importing required libraries

Step 2:- Reading datasets in Pandas Dataframe

Don't forget to change the path to your downloaded dataset here!

Step 3:- Exploring the dataset

Step 4:- Separate the target variable
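Steps 2 to 4 can be sketched together as below. To keep the sketch runnable without the real files, it reads a tiny in-memory CSV instead of the downloaded dataset. The column names id and comment_text and the variable names are my assumptions; the six label columns follow the toxicity types listed above.

```python
import io

import pandas as pd

# Tiny in-memory stand-in for the training file so the sketch runs on its own.
# With the downloaded data you would pass your own file path to pd.read_csv().
sample_csv = io.StringIO(
    "id,comment_text,toxic,severe_toxic,obscene,threat,insult,identity_hate\n"
    "1,Thanks for the helpful edit,0,0,0,0,0,0\n"
    "2,You are an idiot,1,0,0,0,1,0\n"
    "3,I will find you and hurt you,1,0,0,1,0,0\n"
)
train = pd.read_csv(sample_csv)

# Step 3: a quick look at the data
print(train.shape)
print(train.head())

# Step 4: separate the six target columns from the comment text
class_names = ["toxic", "severe_toxic", "obscene", "threat", "insult", "identity_hate"]
train_text = train["comment_text"]
train_targets = train[class_names]

# How many comments carry each label
print(train_targets.sum())
```

A comment can carry several labels at once, which is why the targets are kept as six separate columns rather than one.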

Step 5:- Perform Feature Extraction using word n grams

Notice the parameters I am passing to TfidfVectorizer here. Each parameter has its own role, and a summary of each one is as below-

- sublinear_tf:- A boolean flag; if True, sublinear tf scaling is applied, i.e. tf is replaced with 1 + log(tf).

- strip_accents:- Removes accents and performs other character normalization during the preprocessing step. 'ascii' is a fast method that only works on characters that have a direct ASCII mapping. 'unicode' is a slightly slower method that works on any characters.

- analyzer:- Whether the features should be made of word or character n-grams.

- token_pattern:- Regular expression denoting what constitutes a "token"; only used if analyzer='word'.

- ngram_range:- The lower and upper boundary of the range of n-values for the different n-grams to be extracted.

- max_features:- If not None, build a vocabulary that only considers the top max_features terms, ordered by term frequency across the corpus.
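Putting these parameters together, a word-level TfidfVectorizer could look like the sketch below. The parameter values and the toy comments are my assumptions for illustration, not necessarily the exact settings used for this dataset.

```python
from sklearn.feature_extraction.text import TfidfVectorizer

# Toy stand-ins for the train_text and test_text comment columns
train_text = ["you are great", "you are an idiot", "great article, thanks"]
test_text = ["what an idiot", "thanks a lot"]

word_vectorizer = TfidfVectorizer(
    sublinear_tf=True,        # use 1 + log(tf) instead of raw tf
    strip_accents="unicode",  # normalise accented characters
    analyzer="word",          # features are word n-grams
    token_pattern=r"\w{1,}",  # a token is one or more word characters
    ngram_range=(1, 1),       # unigrams only
    max_features=10000,       # keep at most the 10000 most frequent terms
)

# Learn the vocabulary from the training text, then transform both sets
train_word_features = word_vectorizer.fit_transform(train_text)
test_word_features = word_vectorizer.transform(test_text)

print(train_word_features.shape, test_word_features.shape)
```

Words that appear only in the test text, like 'lot' here, are simply ignored, because the vocabulary is fixed once the vectorizer has been fitted.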

Step 6:- Perform Feature Extraction using char n grams

Step 7:- Stack sparse matrices horizontally (column wise)
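Steps 6 and 7 can be sketched as follows. The character n-gram range of 2 to 4 and the max_features value are hypothetical settings you should tune for your own data; hstack comes from scipy.sparse.

```python
from scipy.sparse import hstack
from sklearn.feature_extraction.text import TfidfVectorizer

# Toy stand-in for the train_text comment column
train_text = ["you are great", "you are an idiot", "great article, thanks"]

# Word-level features (Step 5)
word_vectorizer = TfidfVectorizer(analyzer="word", token_pattern=r"\w{1,}")
train_word_features = word_vectorizer.fit_transform(train_text)

# Character-level features (Step 6): n-grams of 2 to 4 characters
char_vectorizer = TfidfVectorizer(
    sublinear_tf=True,
    strip_accents="unicode",
    analyzer="char",
    ngram_range=(2, 4),
    max_features=50000,
)
train_char_features = char_vectorizer.fit_transform(train_text)

# Step 7: place the two sparse matrices side by side, one row per comment
train_features = hstack([train_word_features, train_char_features]).tocsr()

print(train_word_features.shape, train_char_features.shape, train_features.shape)
```

Character n-grams help with misspelled or deliberately obfuscated words, which are common in toxic comments, so the two feature sets complement each other.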

Step 8:- Train your model and Predict Toxicity on Test data

Here, to check that the model is not overfitting, I am using the cross_val_score() function, where the 'cv' argument determines the cross-validation splitting strategy and 'roc_auc' is the classification scoring metric. You may refer to the following link to see all metrics- scoring-parameter.
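Here is a minimal sketch of this step for a single label. I am assuming a LogisticRegression classifier, which is my own choice for illustration since any scikit-learn classifier with predict_proba would fit here, and the toy comments stand in for the real data.

```python
import numpy as np
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score

# Toy data: 6 clean and 6 toxic comments for a single label ("toxic")
train_text = [
    "thanks for the great article", "very helpful edit, thank you",
    "nice work on this page", "I agree with your changes",
    "good point, well explained", "please add a source here",
    "you are an idiot", "shut up you stupid fool",
    "what a stupid idiot", "you fool, nobody likes you",
    "stupid edit by an idiot", "shut up, idiot",
]
train_target = np.array([0] * 6 + [1] * 6)
test_text = ["thank you for the edit", "you stupid idiot"]

vectorizer = TfidfVectorizer(sublinear_tf=True)
train_features = vectorizer.fit_transform(train_text)
test_features = vectorizer.transform(test_text)

classifier = LogisticRegression()

# 3-fold cross-validation scored with ROC AUC
cv_scores = cross_val_score(
    classifier, train_features, train_target, cv=3, scoring="roc_auc"
)
print("Mean CV ROC AUC:", cv_scores.mean())

# Fit on all training data and predict the probability of the positive class
classifier.fit(train_features, train_target)
toxic_probability = classifier.predict_proba(test_features)[:, 1]
print(toxic_probability)
```

For the full problem you would repeat the same loop once per toxicity type and collect the six probability columns into the output CSV.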

Result:-

Output CSV:-

As a bonus I am going to show you a great visualization technique (WordCloud) to show which words are the most frequent in a given text. First you need to install it using any one of the following commands in the anaconda prompt:- conda install -c conda-forge wordcloud or conda install -c conda-forge/label/gcc7 wordcloud. Then you need to import this package along with the basic visualization libraries as follows-

The WordCloud library has only one required input, and that is a text. So in our case you can use 'train_text' or 'test_text' as input. Let me show you how you can use this library-

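Here, the argument interpolation="bilinear" in plt.imshow() is used to make the displayed image appear smoother. Try different values and see what changes you find! Once you run the above function, it displays a beautiful word cloud.

Notice that in the word cloud every word has a different size, and WordCloud does this on purpose. The difference in size shows which words occur most frequently in the train_text data. If you are interested in learning more about WordCloud then please follow this fantastic article- explore wordcloud.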

That's it for today, friends. Try the above exercise in your notebook, read all the links I have shared above, explore more, practice what you have learnt on a new dataset like Twitter sentiment analysis data, and don't be afraid of failure. Keep trying! With that said, my friends- go chase your dreams, have an awesome day, make every second count and see you later in my next post.
