Another post starts with you beautiful people!

In my last post we built a digit recognition deep learning model using Keras and achieved 97% accuracy. If you have just started your deep learning journey, I recommend visiting my last three posts before starting this one. Keras provides various deep learning models like VGG16, ResNet50, NASNet etc. with pre-trained weights, which can be used as a base model for your work. You can easily fine-tune them as per your requirements without writing code from scratch. You can find all the details of these models in this link. These models' weights are pre-trained on the ImageNet dataset, so you can use them in your image classification task and they can recognize 1,000 common object classes out of the box.

In this post I am going to share how to use one of these models to identify an object in a given image and then deploy this model as a REST API using the Flask framework. You will also learn how to consume this API with your Python code. This knowledge will definitely help you when you work as a Data Scientist in a team and need to integrate your model with a front-end framework. A REST API is an industry-standard way to let two resources built on different languages or platforms interact. For example, suppose you have created a machine learning model for a product recommendation engine in Python, and your client's application uses a Java framework as the back end and Angular as the front end. How will a front-end developer integrate the Python and Java logic in the front end? A REST API is one simple way of solving such cross-platform interaction issues. You can also find an example of this post on the official Keras blog, which I followed, but I ran into some issues while running that example on my local machine, so I thought I would share a complete project with you all. So let's start our learning by following the steps below-

- Open your PyCharm IDE and create a new project. Give your project any name (I named mine 'Keras-Model-as-Rest-API') and save it with the default settings.

- In the project, right-click and go to New, then select Python File and name this file 'run_keras_server.py'.

In this Python file, we will first import the required packages for our image classification model like below-

You may see red underline errors in PyCharm, but you can ignore them for now. They appear because these packages are not yet installed in PyCharm's interpreter.

In the first three lines, we import Keras components. The first one, ResNet50, is a model with pre-trained weights. This model is trained on ImageNet and reaches about 75% top-1 accuracy and 92% top-5 accuracy on the ImageNet validation set. The default input size for this model is 224 x 224. The second import converts a PIL image object to a NumPy array. The third import, imagenet_utils, is used for preprocessing the input image (and, later, for decoding the predictions). The remaining imports are basic libraries.
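To make the preprocessing step less of a black box, here is a NumPy-only sketch of what Keras documents for ResNet50's preprocess_input (the default "caffe" mode of imagenet_utils.preprocess_input): flip the channels from RGB to BGR and subtract the per-channel ImageNet mean, with no scaling. The function name caffe_preprocess is hypothetical and only for illustration; in the real server you should keep calling the Keras function.

```python
import numpy as np

# Per-channel ImageNet means in BGR order, as used by Keras' "caffe" preprocessing mode
IMAGENET_BGR_MEAN = np.array([103.939, 116.779, 123.68], dtype=np.float32)


def caffe_preprocess(batch):
    """Hypothetical NumPy re-implementation of caffe-style preprocessing.

    batch: array of shape (N, height, width, 3) holding RGB values in [0, 255].
    Returns a float32 array with the channels flipped to BGR and the ImageNet
    mean subtracted from each channel (no scaling to [0, 1]).
    """
    batch = np.asarray(batch, dtype=np.float32)
    bgr = batch[..., ::-1]          # RGB -> BGR
    return bgr - IMAGENET_BGR_MEAN  # zero-center each channel


# One mid-grey 224 x 224 RGB image with a batch dimension added in front
image = np.full((224, 224, 3), 128.0, dtype=np.float32)
batch = np.expand_dims(image, axis=0)
out = caffe_preprocess(batch)
print(out.shape)  # (1, 224, 224, 3)
```

Note the leading batch dimension: Keras models always predict on batches, so even a single image is passed as an array of shape (1, 224, 224, 3).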

After these imports, we will initialize our Flask application and our Keras model as below-
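A minimal sketch of this initialization. The name app matches the @app.route decorator used later in this post; the global variable name model, left as None until load_model() runs, is an assumption borrowed from the official Keras blog example.

```python
import flask

# Create the Flask application; __name__ tells Flask where the app's module lives
app = flask.Flask(__name__)

# Placeholder for the Keras model; load_model() fills it in before the server starts
model = None
```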

Now we will write three functions for the following three purposes: loading our trained Keras model and preparing it for inference; preprocessing an input image before passing it through our network for prediction; and classifying the incoming data from the request and returning the results to the client. In our first function, load_model(), we will initialize the ResNet50 model and store it in a global variable so that we can use it later, as below-
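Here is a sketch of load_model(), assuming the standalone keras package is installed (it ships with recent TensorFlow). Placing the import inside the function is my own choice to keep importing the module cheap; you can equally keep it at the top of the file with the other imports.

```python
model = None  # global that holds the loaded network


def load_model():
    """Load ResNet50 with ImageNet weights into the global `model`."""
    global model
    # Heavy import done lazily; the first call also downloads the
    # ImageNet weights if they are not already cached locally
    from keras.applications import ResNet50

    model = ResNet50(weights="imagenet")
```

To serve your own network instead, the body could call keras.models.load_model() on your saved model file rather than building ResNet50.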

The above function is easy to adapt: you can replace ResNet50 with your own model. Next, in the second function, prepare_image(), we will put the image conversion logic: we convert the image to RGB format if it is not already, resize it to the model's input size, turn it into a NumPy array and then preprocess it using the imagenet_utils.preprocess_input() function, like below-
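A sketch of prepare_image() under a few stated assumptions: np.asarray is swapped in for Keras' img_to_array (both give a float32 (height, width, 3) array for an RGB image), and the preprocess parameter is a hypothetical hook I added so the function can be tried without Keras. By default it falls back to imagenet_utils.preprocess_input as described above.

```python
import numpy as np
from PIL import Image


def prepare_image(image, target, preprocess=None):
    """Turn a PIL image into a (1, height, width, 3) batch ready for ResNet50.

    `preprocess` is a hypothetical hook added for this sketch; by default it
    uses Keras' imagenet_utils.preprocess_input.
    """
    # Convert grayscale, RGBA, palette, ... images to 3-channel RGB
    if image.mode != "RGB":
        image = image.convert("RGB")
    # Resize to the network's input size; PIL expects (width, height)
    image = image.resize(target)
    # Same result as Keras' img_to_array for an RGB image: float32, shape (H, W, 3)
    array = np.asarray(image, dtype="float32")
    # Add the batch dimension: (H, W, 3) -> (1, H, W, 3)
    array = np.expand_dims(array, axis=0)
    if preprocess is None:
        from keras.applications import imagenet_utils

        preprocess = imagenet_utils.preprocess_input
    return preprocess(array)


# Example: a 300 x 200 grayscale image becomes a (1, 224, 224, 3) batch.
# The identity preprocess keeps this demo independent of Keras.
sample = Image.new("L", (300, 200), color=128)
print(prepare_image(sample, target=(224, 224), preprocess=lambda x: x).shape)  # (1, 224, 224, 3)
```

In the server you would simply call prepare_image(image, target=(224, 224)).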

Next, in our third function, predict(), we will write the logic to ensure that an image was properly uploaded to our API endpoint. Then we will read the uploaded image and transform it into the input format required by our ResNet50 model. Next, we will make a prediction on this transformed image and decode it using the imagenet_utils.decode_predictions() function so that we can return it to the client. Finally, we will return the response in JSON format, which is the most commonly used format for REST APIs, like below-
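Here is a sketch of predict() along the lines of the official Keras blog example. Assumptions: the client uploads the file in a form field named "image", and the global model has already been filled in by load_model(). The resizing and array steps repeat what prepare_image() does so the snippet runs on its own, and the Keras import sits inside the branch only so the snippet can be loaded and its error path exercised without TensorFlow.

```python
import io

import flask
import numpy as np
from PIL import Image

app = flask.Flask(__name__)
model = None  # set to ResNet50(weights="imagenet") by load_model() in the real server


@app.route("/predict", methods=["POST"])
def predict():
    # Assume failure until a prediction has actually been made
    data = {"success": False}

    # The client must upload the image as a multipart file field called "image"
    uploaded = flask.request.files.get("image")
    if uploaded:
        from keras.applications import imagenet_utils

        # Read the raw bytes into a PIL image, then build a (1, 224, 224, 3) batch
        image = Image.open(io.BytesIO(uploaded.read())).convert("RGB")
        batch = np.expand_dims(np.asarray(image.resize((224, 224)), dtype="float32"), axis=0)
        batch = imagenet_utils.preprocess_input(batch)

        # Predict, then map the 1,000 class scores to the top-5 (id, label, probability) tuples
        preds = model.predict(batch)
        results = imagenet_utils.decode_predictions(preds)

        # float() because NumPy floats are not JSON serializable
        data["predictions"] = [
            {"label": label, "probability": float(prob)}
            for (_imagenet_id, label, prob) in results[0]
        ]
        data["success"] = True

    # Return the dictionary as a JSON response
    return flask.jsonify(data)
```

Returning {"success": false} instead of raising an error gives clients a single, predictable response shape to check.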

In the above code, notice that we have used @app.route("/predict", methods=["POST"]) just above our predict() function. Here route() is a decorator of the Flask class that tells the application which URL should call the associated function. We have bound this function to "/predict", which means that a request to http://localhost:5000/predict will call predict() and return its output. We have also restricted this route to the POST method, which is used to send data (here, the image) to the server. Because of this, simply opening the URL in a browser, which sends a GET request, returns a 405 Method Not Allowed error instead of a prediction.
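To see what route() and methods=["POST"] do without loading any model, the following sketch registers a hypothetical stand-in predict() that only reports how many bytes it received, and calls it through Flask's built-in test client instead of a running server.

```python
import flask

app = flask.Flask(__name__)


# route() registers the function for the URL "/predict"; methods=["POST"]
# makes Flask reject any other HTTP method with 405 Method Not Allowed
@app.route("/predict", methods=["POST"])
def predict():
    # Hypothetical stand-in body: report how many bytes were posted
    return flask.jsonify({"received_bytes": len(flask.request.get_data())})


client = app.test_client()
print(client.get("/predict").status_code)                 # 405: a browser visit sends GET
print(client.post("/predict", data=b"abc").get_json())    # {'received_bytes': 3}
```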

At the end of this file, we will add a main block that calls our load_model() function and then starts the Flask server, like below-

That's it: you have written your Keras model as a REST API. But how will anyone consume this API? For this purpose, we will create a new Python file in the same project and name it 'simple_request.py'. In this file, we will first import the requests package. Next, we will define two variables holding the Keras REST API endpoint URL and the input image path. Then we will load the input image and construct the payload for the request. Next, we will submit our request as a POST request with the image attached as a file upload, parse the JSON response, check whether the classification succeeded (or not) and then loop over the predictions, like below-
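A minimal sketch of simple_request.py, assuming the server reads the upload from a form field named "image" (as in the official Keras blog example) and listens on localhost:5000. IMAGE_PATH and dog.jpg come from this post; the variable name KERAS_REST_API_URL and the helper functions classify() and format_predictions() are my own illustrative choices.

```python
import requests

# Endpoint served by run_keras_server.py (Flask's default port is 5000)
KERAS_REST_API_URL = "http://localhost:5000/predict"
# Image to classify; change this path to try another image
IMAGE_PATH = "dog.jpg"


def classify(image_path=IMAGE_PATH, url=KERAS_REST_API_URL):
    """Upload the image as a multipart file field named "image" and return the JSON reply."""
    with open(image_path, "rb") as f:
        payload = {"image": f.read()}
    # files= sends multipart/form-data; the generous timeout allows for a slow first prediction
    response = requests.post(url, files=payload, timeout=60)
    return response.json()


def format_predictions(result):
    """Turn the server's JSON reply into numbered, printable lines."""
    if not result.get("success"):
        return ["Request failed"]
    return [
        "{}. {}: {:.4f}".format(i + 1, p["label"], p["probability"])
        for i, p in enumerate(result["predictions"])
    ]
```

With the server running, printing each line of format_predictions(classify()) shows one numbered label and probability per prediction.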

At this point, you have completed your API work. Now you will learn how to structure your project in a standard way so that you can easily push your code to a GitHub repository later. For this purpose, create a requirements.txt file in your project and put the following lines in it-

This file is useful for installing the required packages in a virtual environment or in any environment other than your local one. We will use this file later in this post, so don't worry! Now add another file named .gitignore with the following content-

This file prevents us from accidently pushing not required files in github repository. Next, you can also add a READ.MD file where you can define the description of this project. Once you follow above steps your project will look like below-

Here dog.jpg and panther.jpg are some sample images which I have put for testing purpose. You can use another images. If you face any issue you can find the complete project in my My Github Url. If you like my work then don't forget to click on the Star icon in my github repository before cloning it :)

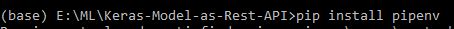

Now that everything is set up, it's time to run our files. To run our first file, run_keras_server.py, open an Anaconda prompt, go to the project directory and then run the following three commands one by one: pip install pipenv, pipenv install and pipenv shell.

The pip install pipenv command installs pipenv, a tool for creating and managing the virtual environment where you will install the required packages. Using a virtual environment is good practice because it keeps these packages separate from your main Python installation. The pipenv install command creates the virtual environment for this project, and the pipenv shell command activates it. After this, you need to install the required packages, and for this purpose we use our requirements.txt file: run pip install -r requirements.txt. This will install all the packages; if you get a "module not found" error for any package, install that package with the pip install <package name> command. Finally, run your script with the command python run_keras_server.py and wait for some time while your Flask server starts-

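Once the server has started, open another Anaconda prompt, go to the same project directory and run the command python simple_request.py (if the requests package is installed only inside the virtual environment, run pipenv shell first).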

After a while you will see the predictions for your input image. To classify a different image, change the IMAGE_PATH variable in the simple_request.py file.

That's it for today. Try the above exercise with other pre-trained Keras models or even with your own model and deploy it as an API. This is a simple example of deployment and can be used in a development environment to showcase your model within your team. Making it production-ready requires a lot of other work, which I will share later. Till then, go chase your dreams, have an awesome day, make every second count and see you later in my next post.
